Latest Posts

-

Dropout supported in NeuralNetwork class

Adding support for dropout in NeuralNetwork class

I added support for dropout in the NeuralNetwork class to help reduce overfitting during training. Just specify the dropout ratio with the ‘dropout’ argument when you add a layer:

    model = Model(num_input=28*28)
    model.add(Layer(1024, activation=af.RELU, dropout=0.5))
    model.add(Layer(512, activation=af.RELU, dropout=0.5))
    model.add(Layer(10, activation=af.SIGMOID))

See the MNIST Fashion example for the complete script. Accuracy for the above script was 88.84% with 20 epochs.

The dropout ratio is the fraction of units that you want to keep. So if you specify 0.8, 20% of the units are randomly dropped. The actual implementation is a little more complicated. If you want to see how it’s implemented, have a look at the actual code.
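To illustrate the keep-ratio convention, here is a minimal NumPy sketch of "inverted dropout", one common way to implement it. This is an assumption for illustration and not the framework's actual code: the function names `dropout_forward` and `dropout_backward` and the `keep_prob` parameter are hypothetical.

```python
import numpy as np


def dropout_forward(x, keep_prob, rng, training=True):
    """Randomly keep each unit with probability keep_prob (hypothetical helper).

    Kept activations are scaled by 1 / keep_prob so that the expected
    activation is unchanged and no rescaling is needed at inference time.
    """
    if not training or keep_prob >= 1.0:
        return x, None
    # Entries are 1 / keep_prob where the unit is kept and 0 where it is dropped.
    mask = (rng.random(x.shape) < keep_prob) / keep_prob
    return x * mask, mask


def dropout_backward(grad_out, mask):
    """Pass gradients only through the units that were kept in the forward pass."""
    return grad_out if mask is None else grad_out * mask


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    activations = np.ones((4, 5))
    dropped, mask = dropout_forward(activations, keep_prob=0.8, rng=rng)
    print(dropped)
```

With `keep_prob=0.8`, roughly 20% of the entries come out as zero and the rest are scaled up to 1.25, which keeps the average activation close to its original value.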

I used this paper as the reference, but any mistake in the implementation is mine.

-

Video sample of using YOLO to detect objects

YOLO

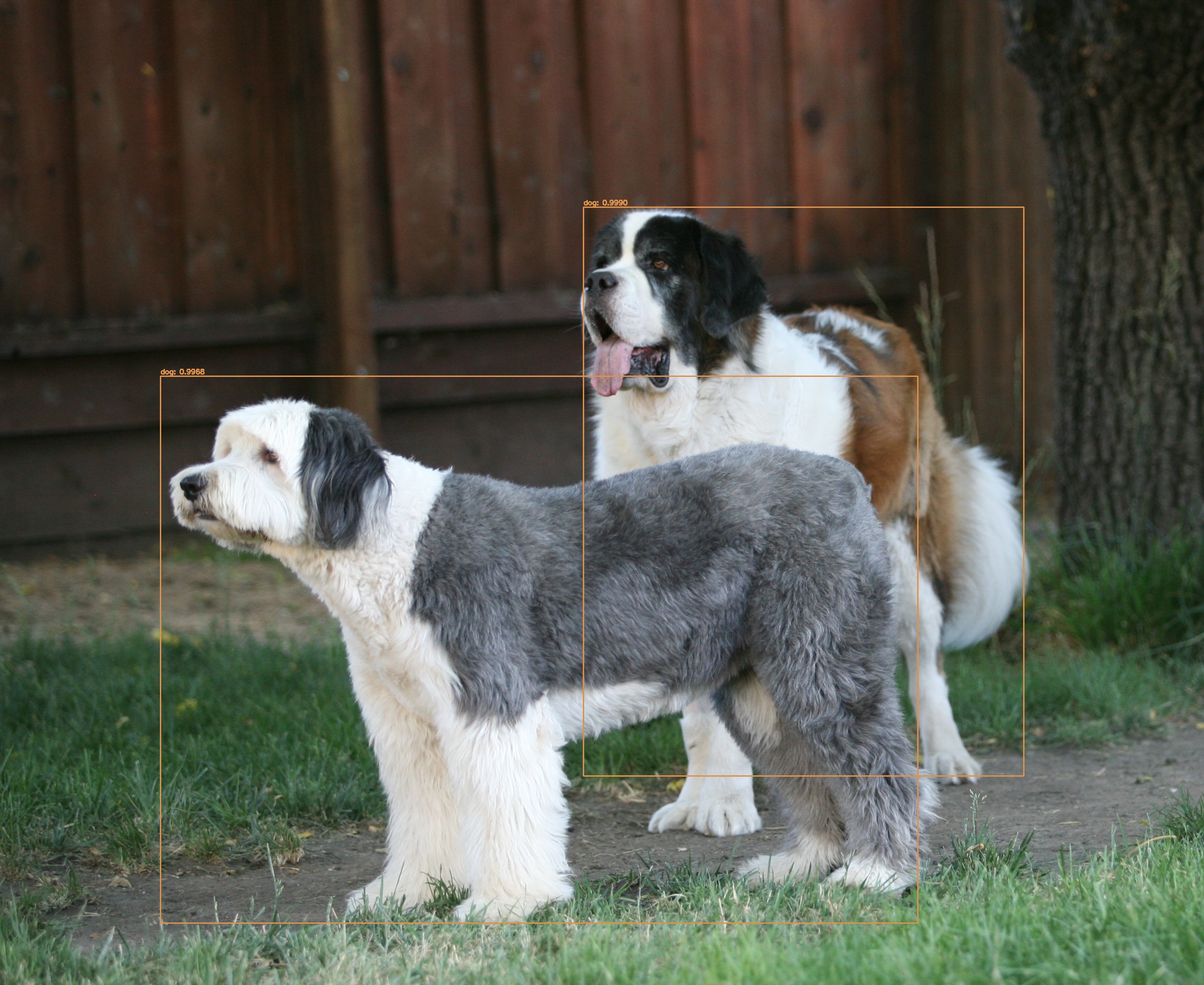

YOLO is one of the popular methods for detecting objects in an image. Object detection means that the detector provides the coordinates of each object detected in a photo, in addition to the label of each object.
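To make "coordinates plus a label" concrete, here is a small sketch of turning one raw detection row into a labeled bounding box. It assumes the row layout that YOLOv3 produces through OpenCV's neural network module: center x, center y, width, and height as fractions of the image size, then an objectness score, then one score per class. The `Detection` class, the `decode_row` function, and the three-label list in the usage example are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Detection:
    label: str
    confidence: float
    left: int    # x of the top-left corner, in pixels
    top: int     # y of the top-left corner, in pixels
    width: int   # box width, in pixels
    height: int  # box height, in pixels


def decode_row(row: Sequence[float], labels: Sequence[str],
               img_w: int, img_h: int) -> Detection:
    """Convert one detection row into a pixel-space box with a label."""
    cx, cy, w, h = row[0], row[1], row[2], row[3]
    scores = row[5:]  # per-class scores follow the objectness score
    class_id = max(range(len(scores)), key=lambda i: scores[i])
    box_w = int(w * img_w)
    box_h = int(h * img_h)
    left = int(cx * img_w - box_w / 2)
    top = int(cy * img_h - box_h / 2)
    return Detection(labels[class_id], scores[class_id], left, top, box_w, box_h)


if __name__ == "__main__":
    row = [0.5, 0.5, 0.25, 0.5, 0.9, 0.1, 0.8, 0.05]
    print(decode_row(row, ["person", "dog", "car"], img_w=640, img_h=480))
```

A real detector returns many such rows per image, so low-confidence rows are usually discarded and overlapping boxes merged before anything is drawn.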

The photos below show how YOLO identified my dogs’ positions, indicated by bounding boxes:

Maddie and Olivia

Aimée (the big puppy ;-)

I created a short video clip to demo how YOLO processes traffic on a city street. I took a video of Taylor St in downtown San Jose and ran it through YOLO to mark the objects’ locations.

Here are the steps to follow if you want to do this yourself:

- Prepare a video

  - Capture a video or find a video you want to use for object detection.

  - Extract frames from the video (e.g. using ffmpeg)

- Go to the YOLO developer’s main website. Follow instructions on the website:

  - git clone the source code

  - Download pre-trained weights file

  - Build using make

  - Run an example program

- Go to pyimagesearch.com and download the example Python code to use YOLO with OpenCV’s neural network module. The site has detailed instructions for the example.

- Copy three files from your local YOLO installation to a directory under the example code

  - coco.names

  - yolov3.weights

  - yolov3.cfg

- Make sure that the example code works.

- Tweak the code to read video frame files and output frame files.

- Run the script

- Combine frames to a video (e.g. using ffmpeg again)
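The frame-handling parts of the steps above can be sketched roughly as follows. This is only an outline under assumptions, not the script I actually used: the `frame_%05d.png` naming pattern, the 30 fps default, the `libx264` encoder, and the `work` directory are all illustrative choices, and `annotate` is a hypothetical callable standing in for the tweaked example code that runs YOLO on one frame and writes the marked-up result.

```python
import subprocess
from pathlib import Path
from typing import Callable, List


def extract_frames_cmd(video: str, frames_dir: str) -> List[str]:
    """ffmpeg command that writes every frame of the video as a numbered PNG."""
    return ["ffmpeg", "-i", video, str(Path(frames_dir) / "frame_%05d.png")]


def combine_frames_cmd(frames_dir: str, output: str, fps: int = 30) -> List[str]:
    """ffmpeg command that encodes the numbered PNGs back into a video."""
    return [
        "ffmpeg",
        "-framerate", str(fps),
        "-i", str(Path(frames_dir) / "frame_%05d.png"),
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",
        output,
    ]


def process_frames(in_dir: str, out_dir: str,
                   annotate: Callable[[Path, Path], None]) -> int:
    """Call annotate(src, dst) for every frame in name order; return the count."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    frames = sorted(Path(in_dir).glob("frame_*.png"))
    for src in frames:
        annotate(src, out / src.name)
    return len(frames)


def run_pipeline(video: str, output: str,
                 annotate: Callable[[Path, Path], None],
                 fps: int = 30, work_dir: str = "work") -> None:
    """Extract frames, annotate each one, then rebuild the video (needs ffmpeg)."""
    raw = Path(work_dir) / "raw"
    marked = Path(work_dir) / "marked"
    raw.mkdir(parents=True, exist_ok=True)
    subprocess.run(extract_frames_cmd(video, str(raw)), check=True)
    process_frames(str(raw), str(marked), annotate)
    subprocess.run(combine_frames_cmd(str(marked), output, fps), check=True)
```

Set `fps` to the frame rate of the source video so the result plays at the original speed. Keeping the detector behind a plain callable means the frame loop can be tried out with a simple file copy before plugging in YOLO.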

-

Convolutional neural network accuracy against MNIST digit data

I have been working on building my own neural network framework since November. Lately I have been focusing on implementing a convolutional neural network ("CNN") to add to the framework. Initially I built the CNN with a back-of-the-envelope calculation, and it didn't work at all. So I spent two days working through the calculation and documenting the result, which I posted earlier.

At least for me, it is very hard to debug a neural network framework. When a neural network doesn't work, it could be due to the hyperparameters, the specific values the weights were initialized to for the session, or one or more bugs in the framework itself. After spending a significant amount of time debugging, fearing that I might not be able to get this to work, I finally found a few bugs in my framework. Fixing them resolved the problem of the loss not going down.

I ran a 4-epoch training session against MNIST digit data overnight, and woke up to find the accuracy was 97.95%. If you use a pre-built neural network framework, I believe the accuracy number could go higher. However, this is a number that I feel comfortable with declaring a victory for my project. If you want to try it, this is the specific script: CNN MNIST Python script.

-

Calculate back propagation for convolutional neural network

I'm in the middle of adding a convolutional neural network to the machine learning framework that I built from scratch using numpy. I documented how I calculate back propagation in the following two pages:

For now, I'm setting the stride to 2 on a conv layer instead of using a max pooling layer to down-sample data, so I'm covering the calculation for this specific use case. Please note that the CNN implementation in the repo is still being debugged and is not ready for prime time yet. As soon as I'm done, I'll post an update.

-

Parametric curve

A parametric curve is a very fascinating feature in math.